Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week, SpeedyBrand, a company using generative AI to create SEO-optimized content, emerged from stealth with backing from Y Combinator. It hasn’t attracted a lot of funding yet ($2.5 million), and its customer base is relatively small (about 50 brands). But it got me thinking about how generative AI is beginning to change the makeup of the web.

As The Verge’s James Vincent wrote in a recent piece, generative AI models are making it cheaper and easier to generate lower-quality content. NewsGuard, a company that provides tools for vetting news sources, has exposed hundreds of ad-supported sites with generic-sounding names featuring misinformation created with generative AI.

It’s causing a problem for advertisers. Many of the sites spotlighted by NewsGuard seem built exclusively to abuse programmatic advertising, the automated systems for placing ads on pages. In its report, NewsGuard found close to 400 instances of ads from 141 major brands that appeared on 55 of the junk news sites.

It’s not just advertisers who should be worried. As Gizmodo’s Kyle Barr points out, it might just take one AI-generated article to drive mountains of engagement. And even if every AI-generated article only generates a few dollars, that’s still more than the cost of generating the text in the first place, and it’s potential advertising money not being sent to legitimate sites.

So what’s the solution? Is there one? It’s a pair of questions that’s increasingly keeping me up at night. Barr suggests it’s incumbent on search engines and ad platforms to exercise a tighter grip and punish the bad actors embracing generative AI. But given how fast the field is moving — and the infinitely scalable nature of generative AI — I’m not convinced that they can keep up.

Of course, spammy content isn’t a new phenomenon, and there have been waves before. The web has adapted. What’s different this time is that the barrier to entry is dramatically low, in terms of both the cost and the time that has to be invested.

Vincent strikes an optimistic tone, implying that if the web is eventually overrun with AI junk, it could spur the development of better-funded platforms. I’m not so sure. What’s not in doubt, though, is that we’re at an inflection point, and that the decisions made now around generative AI and its outputs will impact the function of the web for some time to come.

Here are other AI stories of note from the past few days:

OpenAI officially launches GPT-4: OpenAI this week announced the general availability of GPT-4, its latest text-generating model, through its paid API. GPT-4 can generate text (including code) and accept image and text inputs — an improvement over GPT-3.5, its predecessor, which only accepted text — and performs at “human level” on various professional and academic benchmarks. But it’s not perfect, as we note in our previous coverage. (Meanwhile ChatGPT adoption is reported to be down, but we’ll see.)

Bringing ‘superintelligent’ AI under control: In other OpenAI news, the company is forming a new team led by Ilya Sutskever, its chief scientist and one of OpenAI’s co-founders, to develop ways to steer and control “superintelligent” AI systems.

Anti-bias law for NYC: After months of delays, New York City this week began enforcing a law that requires employers using algorithms to recruit, hire or promote employees to submit those algorithms for an independent audit — and make the results public.

Valve tacitly greenlights AI-generated games: Valve issued a rare statement after claims it was rejecting games with AI-generated assets from its Steam games store. The notoriously close-lipped developer said its policy was evolving and not a stand against AI.

Humane unveils the Ai Pin: Humane, the startup launched by ex-Apple design and engineering duo Imran Chaudhri and Bethany Bongiorno, this week revealed details about its first product: The Ai Pin. As it turns out, Humane’s product is a wearable gadget with a projected display and AI-powered features — like a futuristic smartphone, but in a vastly different form factor.

Warnings over EU AI regulation: Major tech founders, CEOs, VCs and industry giants across Europe signed an open letter to the EU Commission this week, warning that Europe could miss out on the generative AI revolution if the EU passes laws stifling innovation.

Deepfake scam makes the rounds: Check out this clip of U.K. consumer finance champion Martin Lewis apparently shilling an investment opportunity backed by Elon Musk. Seems normal, right? Not exactly. It’s an AI-generated deepfake — and potentially a glimpse of the AI-generated misery fast accelerating onto our screens.

AI-powered sex toys: Lovense — perhaps best known for its remote-controllable sex toys — this week announced its ChatGPT Pleasure Companion. Launched in beta in the company’s remote control app, the “Advanced Lovense ChatGPT Pleasure Companion” invites you to indulge in juicy and erotic stories that the Companion creates based on your selected topic.

Other machine learnings

Our research roundup commences with two very different projects from ETH Zurich. First is aiEndoscopic, a smart intubation spinoff. Intubation is necessary for a patient’s survival in many circumstances, but it’s a tricky manual procedure usually performed by specialists. The intuBot uses computer vision to recognize and respond to a live feed from the mouth and throat, guiding and correcting the position of the endoscope. This could allow people to safely intubate when needed rather than waiting on the specialist, potentially saving lives.

In a totally different domain, ETH Zurich researchers also contributed second-hand to a Pixar movie by pioneering the technology needed to animate smoke and fire without falling prey to the fractal complexity of fluid dynamics. Their approach was noticed and built on by Disney and Pixar for the film Elemental. Interestingly, it’s not so much a simulation solution as a style transfer one — a clever and apparently quite valuable shortcut. (Image up top is from this.)

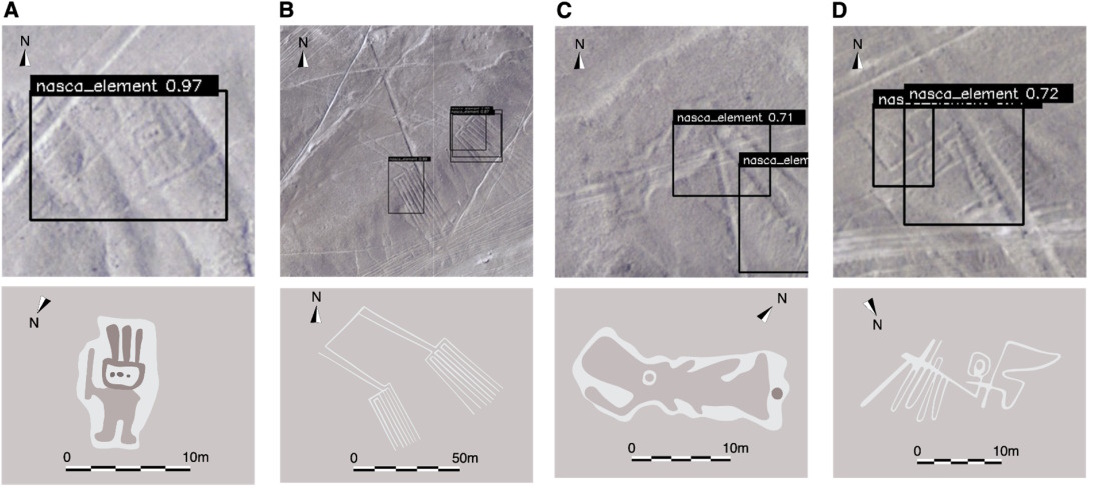

AI in nature is always interesting, but AI applied to archaeology is even more so. Research led by Yamagata University aimed to identify new Nasca lines, the enormous “geoglyphs” in Peru. You might think that, being visible from orbit, they’d be pretty obvious, but erosion and tree cover over the millennia since these mysterious formations were created mean there is an unknown number hiding just out of sight. After being trained on aerial imagery of known and obscured geoglyphs, a deep learning model was set free on other views, and amazingly it detected at least four new ones, as you can see below. Pretty exciting!

Four Nasca geoglyphs newly discovered by an AI agent.

In a more immediately relevant sense, AI-adjacent tech is always finding new work detecting and predicting natural disasters. Stanford engineers are putting together data for training future wildfire prediction models by performing simulations of heated air above a forest canopy in a 30-foot water tank. If we’re to model the physics of flames and embers traveling outside the bounds of a wildfire, we’ll need to understand them better, and this team is doing what they can to approximate that.

At UCLA they’re looking into how to predict landslides, which are becoming more common as fires and other environmental factors change. But while AI has already been used to predict them with some success, it doesn’t “show its work,” meaning a prediction doesn’t explain whether it’s because of erosion, or a water table shifting, or tectonic activity. A new “superposable neural network” approach has the layers of the network using different data but running in parallel rather than all together, letting the output be a little more specific about which variables led to increased risk. It’s also way more efficient.
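
To make the “parallel rather than all together” idea concrete, here is a rough sketch of an additive model in which each input variable gets its own tiny sub-network and the final risk score is just the sum of their outputs. This is not the UCLA team’s code: the feature names, the `SUBNETS` table and every weight in it are made up for illustration, and a real model would learn its weights from data.

```python
import math

# Hypothetical, hand-picked weights for illustration only (not learned, not from the paper).
# Each variable gets its own tiny sub-network:
# (hidden-layer weights, hidden-layer biases, output weights).
SUBNETS = {
    "erosion": ([1.5, -0.5], [0.0, 0.2], [0.8, 0.1]),
    "water_table": ([2.0, 1.0], [-0.5, 0.0], [0.6, 0.3]),
    "tectonic_activity": ([1.0, 0.5], [0.1, -0.1], [0.4, 0.2]),
}


def subnet_output(value, params):
    """Run one scalar input through its own one-hidden-layer network."""
    w_hidden, b_hidden, w_out = params
    hidden = [math.tanh(w * value + b) for w, b in zip(w_hidden, b_hidden)]
    return sum(w * h for w, h in zip(w_out, hidden))


def predict_with_contributions(features):
    """Return the total score and the share attributable to each variable."""
    contributions = {
        name: subnet_output(features[name], params)
        for name, params in SUBNETS.items()
    }
    return sum(contributions.values()), contributions


if __name__ == "__main__":
    score, parts = predict_with_contributions(
        {"erosion": 0.9, "water_table": 0.4, "tectonic_activity": 0.0}
    )
    print(f"total score: {score:.3f}")
    for name, part in parts.items():
        print(f"  {name}: {part:+.3f}")
```

Because the sub-networks never mix their inputs, each variable’s contribution can be read off directly, which is the kind of “showing its work” a single monolithic network doesn’t offer.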

Google is looking at an interesting challenge: how do you get a machine learning system to learn from dangerous knowledge yet not propagate it? For instance, if its training set includes the recipe for napalm, you don’t want it to repeat it — but in order to know not to repeat it, it needs to know what it’s not repeating. A paradox! So the tech giant is looking for a method of “machine unlearning” that lets this sort of balancing act occur safely and reliably.

If you’re looking for a deeper look at why people seem to trust AI models for no good reason, look no further than this Science editorial by Celeste Kidd (UC Berkeley) and Abeba Birhane (Mozilla). It gets into the psychological underpinnings of trust and authority and shows how current AI agents basically use those as springboards to escalate their own worth. It’s a really interesting article if you want to sound smart this weekend.

Though we often hear about the infamous Mechanical Turk fake chess-playing machine, that charade did inspire people to create what it pretended to be. IEEE Spectrum has a fascinating story about the Spanish physicist and engineer Torres Quevedo, who created an actual mechanical chess player. Its capabilities were limited, but that’s how you know it was real. Some even propose that his chess machine was the first “computer game.” Food for thought.

The week in AI: Generative AI spams up the web by Kyle Wiggers originally published on TechCrunch
