Another bit of self-reporting from Meta today vis-a-vis internal efforts to identify and shut down political disinformation networks operating on its social platforms (aka “coordinated, inauthentic behavior” — or CIB — as it prefers to term it).

Specifically, it has reported taking down two separate political disinformation networks. One is a “small” network originating from China, which it says had a few distinct but short-term political targets between the fall of 2021 and mid-September 2022, including both sides in the US midterms and attacks on the Czech government’s support for Ukraine. The second is a larger network that Meta identifies as Russian and says started operating in May this year, distributing anti-Ukraine content across a number of European countries, including Germany, France, Italy, the UK and Ukraine itself.

The Chinese network is notable in being the first Meta has reported targeting the US midterms, although — per its description — the disinformation operation appeared to have a variety of different targets. It also does not sound very successful in engaging Meta users, according to the company’s assessment.

“In the United States, it targeted people on both sides of the political spectrum; in Czechia, this activity was primarily anti-government, criticizing the state’s support of Ukraine in the war with Russia and its impact on the Czech economy, using the criticism to caution against antagonizing China,” Meta writes of the Chinese network in a newsroom post authored by Ben Nimmo, its global threat intelligence lead (formerly of the social media analytics firm Graphika) and David Agranovich, director, threat disruption.

“Each cluster of accounts — around half a dozen each — posted content at low volumes during working hours in China rather than when their target audiences would typically be awake. Few people engaged with it and some of those who did called it out as fake. Our automated systems took down a number of accounts and Facebook Pages for various Community Standards violations, including impersonation and inauthenticity.”

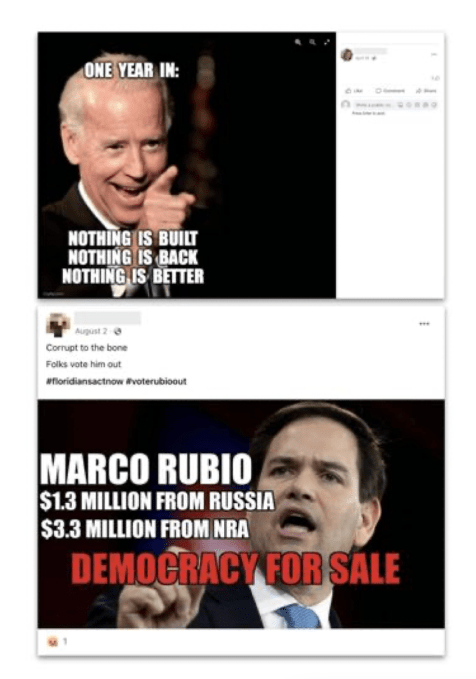

Examples of inauthentic content from Meta’s report which originated with the China network targeting US users (Screengrab: Natasha Lomas/TechCrunch)

The Russian network is described by Meta as “the largest and most complex Russian-origin operation that we’ve disrupted since the beginning of the war in Ukraine” — and said to be featuring “an unusual combination of sophistication and brute force” — which suggests the disinformation effort was a concerted one.

“The operation began in May of this year and centered around a sprawling network of over 60 websites carefully impersonating legitimate websites of news organizations in Europe, including Spiegel, The Guardian and Bild,” Meta writes, explaining that the modus operandi was to post original articles which criticized Ukraine and Ukrainian refugees, were pro-Russia and argued that Western sanctions on the country would backfire.

“They would then promote these articles and also original memes and YouTube videos across many internet services, including Facebook, Instagram, Telegram, Twitter, petitions websites Change.org and Avaaz, and even LiveJournal,” it went on. “Throughout our investigation, as we blocked this operation’s domains, they attempted to set up new websites, suggesting persistence and continuous investment in this activity across the internet. They operated primarily in English, French, German, Italian, Spanish, Russian and Ukrainian. On a few occasions, the operation’s content was amplified by the Facebook Pages of Russian embassies in Europe and Asia.”

“Together, these two approaches worked as an attempted smash-and-grab against the information environment, rather than a serious effort to occupy it long-term,” Meta adds.

The combination of spoofed websites plus multiple languages required “both technical and linguistic investment”, in its assessment, but it says the social media amplification operation was less slick — relying “primarily” on “crude” ads and fake accounts.

“From the start, the operation built mini-brands across the internet, using them to post, re-share and like anti-Ukraine content in different languages. They would create same-name accounts on different platforms that served as backstops for one another to make them appear more legitimate and to amplify each other. It worked as a fake engagement carousel, with multiple layers of fake entities boosting each other, creating their own echo chamber,” Meta also writes in a fuller report of the Russia disops.

The tech giant says “many” of the accounts involved in this network were “routinely” detected and taken down for inauthenticity by its automated systems — although it does not offer a more specific breakdown of the success rate of its AI than saying a “majority” of accounts, Pages and ads were detected and removed in this way before it even began its investigation.

Meta’s newsroom post says it was alerted to the CIB activity in part by investigative journalism in Germany, and it also credits independent researchers at the Digital Forensics Research Lab for picking up on the disinformation operation. It notes that its report includes a list of domains, petitions and Telegram channels it has assessed as being connected to the operation “to support further research into this and similar cross-internet activities”.

The disclosures are just the latest on coordinated inauthentic behavior takedowns from Meta, which regularly publishes reports on actions it’s taken, after the fact, to identify and remove disinformation operations. It recently revealed it had acted against a pro-US influence operation, for example, and back in 2019 it reported a wave of removals of suspicious activity in Iran.

However, Meta makes these disclosures without providing a comprehensive overview of the total volume of fake accounts and activity on its platforms, making such reports (and their relative significance) hard to quantify.

And while disclosures are clearly important to further additional research into specific disinformation networks and tactics (which may survive even after a specific network has been weeded out), partial transparency does risk presenting a skewed view — potentially implying greater success at purging fake activity than is happening in actuality — so the strongest function of the self-reporting may be in generating publicity for self-serving claims by Meta to be paying close and comprehensive attention to platform safety.

Other views on the comprehensiveness of its counter-manipulation efforts are of course available — such as those expressed by Facebook/Meta whistleblower, Sophie Zhang, whose leaked memo two years ago accused the company of concentrating resources on politically expedient areas, and those likely to garner it the most PR, while paying little mind to large-scale manipulation efforts going on elsewhere, despite her attempts to drum up internal interest in taking action.

Meta’s self-styled “detailed report” of the China and Russia network takedowns includes just a handful of caveated metrics: It says the Chinese network’s presence on Facebook and Instagram stretched to 81 Facebook accounts, eight Pages, one Group and two accounts on Instagram; while it says “about 20” accounts followed one or more of these Pages; “around 250” accounts joined one or more of these Groups; and “less than 10 accounts” followed one or more of these Instagram accounts.

The Russian network, meanwhile, sprawled to 1,633 Facebook accounts, 703 Pages, one Group and 29 Instagram accounts, with Meta saying “about 4,000” accounts followed one or more of these Pages, “less than 10” accounts joined the Group and “about 1,500” accounts followed one or more of the Instagram accounts.

The report also includes a disclosure that the Russian disops effort racked up spending of “around $105,000” on ads on Facebook and Instagram — which Meta specifies were “primarily” paid for in US dollars and euros. (Infamously, some of the Russian ads targeting the 2016 US election were paid for in roubles.)

That level of ad spend is comparable in scale to the political ad spending Facebook disclosed back in 2017 as having been linked to Russia’s targeting of the 2016 US election.

Meta reports takedowns of influence ops targeting US midterms, Ukraine war by Natasha Lomas originally published on TechCrunch
