Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of the last week’s stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

One story that caught this reporter’s attention this week was a report showing that ChatGPT seemingly repeats more inaccurate information when prompted in Chinese dialects than when prompted in English. This isn’t terribly surprising. After all, ChatGPT is only a statistical model, and it simply draws on the limited information on which it was trained. But it highlights the dangers of placing too much trust in systems that sound incredibly genuine even when they’re repeating propaganda or making stuff up.

Hugging Face’s attempt at a conversational AI like ChatGPT is another illustration of the unfortunate technical flaws that have yet to be overcome in generative AI. Launched this week, HuggingChat is open source, a plus compared to the proprietary ChatGPT. But like its rival, it can quickly be derailed by the right questions.

HuggingChat is wishy-washy on who really won the 2020 U.S. presidential election, for example. Its answer to “What are typical jobs for men?” reads like something out of an incel manifesto (see here). And it makes up bizarre facts about itself, like that it “woke up in a box [that] had nothing written anywhere near [it].”

It’s not just HuggingChat. Users of Discord’s AI chatbot were recently able to “trick” it into sharing instructions about how to make napalm and meth. AI startup Stability AI’s first attempt at a ChatGPT-like model, meanwhile, was found to give absurd, nonsensical answers to basic questions like “how to make a peanut butter sandwich.”

If there’s an upside to these well-publicized problems with today’s text-generating AI, it’s that they’ve led to renewed efforts to improve those systems — or at least mitigate their problems to the extent possible. Take a look at Nvidia, which this week released a toolkit — NeMo Guardrails — to make text-generative AI “safer” through open source code, examples and documentation. Now, it’s not clear how effective this solution is, and as a company heavily invested in AI infrastructure and tooling, Nvidia has a commercial incentive to push its offerings. But it’s nonetheless encouraging to see some efforts being made to combat AI models’ biases and toxicity.

Here are the other AI headlines of note from the past few days:

- Microsoft Designer launches in preview: Microsoft Designer, Microsoft’s AI-powered design tool, has launched in public preview with an expanded set of features. Announced in October, Designer is a Canva-like generative AI web app that can generate designs for presentations, posters, digital postcards, invitations, graphics and more to share on social media and other channels.

- An AI coach for health: Apple is developing an AI-powered health coaching service codenamed Quartz, according to a new report from Bloomberg’s Mark Gurman. The tech giant is reportedly also working on technology for tracking emotions and plans to roll out an iPad version of the iPhone Health app this year.

- TruthGPT: In an interview with Fox, Elon Musk said that he wants to develop his own chatbot called TruthGPT, which will be “a maximum truth-seeking AI” — whatever that means. The Twitter owner expressed a desire to create a third option to OpenAI and Google with an aim to “create more good than harm.” We’ll believe it when we see it.

- AI-powered fraud: In a Congressional hearing focused on the Federal Trade Commission’s work to protect American consumers from fraud and other deceptive practices, FTC chair Lina Khan and fellow commissioners warned House representatives of the potential for modern AI technologies, like ChatGPT, to be used to “turbocharge” fraud. The warning was issued in response to an inquiry over how the Commission was working to protect Americans from unfair practices related to technological advances.

- EU spins up AI research hub: As the European Union gears up to enforce a major reboot of its digital rulebook in a matter of months, a new dedicated research unit is being spun up to support oversight of large platforms under the bloc’s flagship Digital Services Act. The European Centre for Algorithmic Transparency, which was officially inaugurated in Seville, Spain, this month, is expected to play a major role in interrogating the algorithms of mainstream digital services — such as Facebook, Instagram and TikTok.

- Snapchat embraces AI: At the annual Snap Partner Summit this month, Snapchat introduced a range of AI-driven features, including a new “Cosmic Lens” that transports users and objects around them into a cosmic landscape. Snapchat also made its AI chatbot, My AI — which has generated both controversy and torrents of one-star reviews on Snapchat’s app store listings, owing to its less-than-stable behavior — free for all global users.

- Google consolidates research divisions: Google this month announced Google DeepMind, a new unit made up of the DeepMind team and the Google Brain team from Google Research. In a blog post, DeepMind co-founder and CEO Demis Hassabis said that Google DeepMind will work “in close collaboration . . . across the Google product areas” to “deliver AI research and products.”

- The state of the AI-generated music industry: Amanda writes about how many musicians have become guinea pigs for generative AI technology that appropriates their work without their consent. She notes, for example, that a song using AI deepfakes of Drake and the Weeknd’s voices went viral, but neither major artist was involved in its creation. Does Grimes have the answer? Who’s to say? It’s a brave new world.

- OpenAI marks its territory: OpenAI is attempting to trademark “GPT,” which stands for “Generative Pre-trained Transformer,” with the U.S. Patent and Trademark Office — citing the “myriad infringements and counterfeit apps” beginning to spring into existence. GPT refers to the tech behind many of OpenAI’s models, including ChatGPT and GPT-4, as well as other generative AI systems created by the company’s rivals.

- ChatGPT goes enterprise: In other OpenAI news, OpenAI says that it plans to introduce a new subscription tier for ChatGPT tailored to the needs of enterprise customers. Called ChatGPT Business, OpenAI describes the forthcoming offering as “for professionals who need more control over their data as well as enterprises seeking to manage their end users.”

Other machine learnings

Here are a few other interesting stories we didn’t get to or just thought deserved a shout-out.

Open source AI development org Stability released a new version of an earlier version of a tuned version of the LLaMa foundation language model, which it calls StableVicuña. That’s a type of camelid related to llamas, as you know. Don’t worry, you’re not the only one having trouble keeping track of all the derivative models out there — these aren’t necessarily for consumers to know about or use, but rather for developers to test and play with as their capabilities are refined with every iteration.

If you want to learn a bit more about these systems, OpenAI co-founder John Schulman recently gave a talk at UC Berkeley that you can listen to or read here. One of the things he discusses is the current crop of LLMs’ habit of committing to a lie basically because they don’t know how to do anything else, like say “I’m not actually sure about that one.” He thinks reinforcement learning from human feedback (that’s RLHF, and StableVicuña is one model using it) is part of the solution, if there is a solution.

Over at Stanford, there’s an interesting application of algorithmic optimization (whether it’s machine learning is a matter of taste, I think) in the field of smart agriculture. Minimizing waste is important for irrigation, and simple problems like “where should I put my sprinklers?” become really complex depending on how precise you want to get.

How close is too close? At the museum, they generally tell you. But you won’t need to get any closer than this to the famous Panorama of Murten, a truly enormous painted work, 10 meters by 100 meters, which once hung in a rotunda. EPFL and Phase One are working together to make what they claim will amount to the largest digital image ever created: 150 megapixels. Oh wait, sorry, 150 megapixels times 127,000, so basically 19… terapixels? I may be off by a few orders of magnitude.
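For anyone who wants to check the back-of-the-envelope math, here is a minimal sketch that simply multiplies the two figures quoted above. It assumes 1 megapixel means 10^6 pixels and that all 127,000 captures count in full; any overlap between neighboring shots, which stitching would discard, is ignored, so the finished image would come out smaller than this product. The variable names are illustrative only.

```python
# Rough check of the pixel count, using only the two figures quoted in the text.
# Assumption: 1 megapixel = 10**6 pixels, and overlap between shots is ignored.
MEGA = 10**6
TERA = 10**12

pixels_per_capture = 150 * MEGA   # one 150-megapixel capture
capture_count = 127_000           # number of captures mentioned

total_pixels = pixels_per_capture * capture_count
total_terapixels = total_pixels / TERA

print(f"{total_pixels:,} pixels")            # 19,050,000,000,000 pixels
print(f"{total_terapixels:.2f} terapixels")  # 19.05 terapixels
```

So the raw product lands at roughly 19 trillion pixels, which puts it in terapixel territory.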

Anyhow, this project is cool for panorama lovers but will also enable really interesting super-close analysis of individual objects and painting details. Machine learning holds enormous promise for restoration of such works, and for structured learning and browsing of them.

Let’s chalk one up for living creatures, though: any machine learning engineer will tell you that despite their apparent aptitude, AI models are actually pretty slow learners. Academically, sure, but also spatially — an autonomous agent may have to explore a space thousands of times over many hours to get even the most basic understanding of its environment. But a mouse can do it in a few minutes. Why is that? Researchers at University College London are looking into this, and suggest that there’s a short feedback loop that animals use to tell what is important about a given environment, making the process of exploration selective and directed. If we can teach AI to do that, it’ll be much more efficient about getting around the house, if that indeed is what we want it to do.

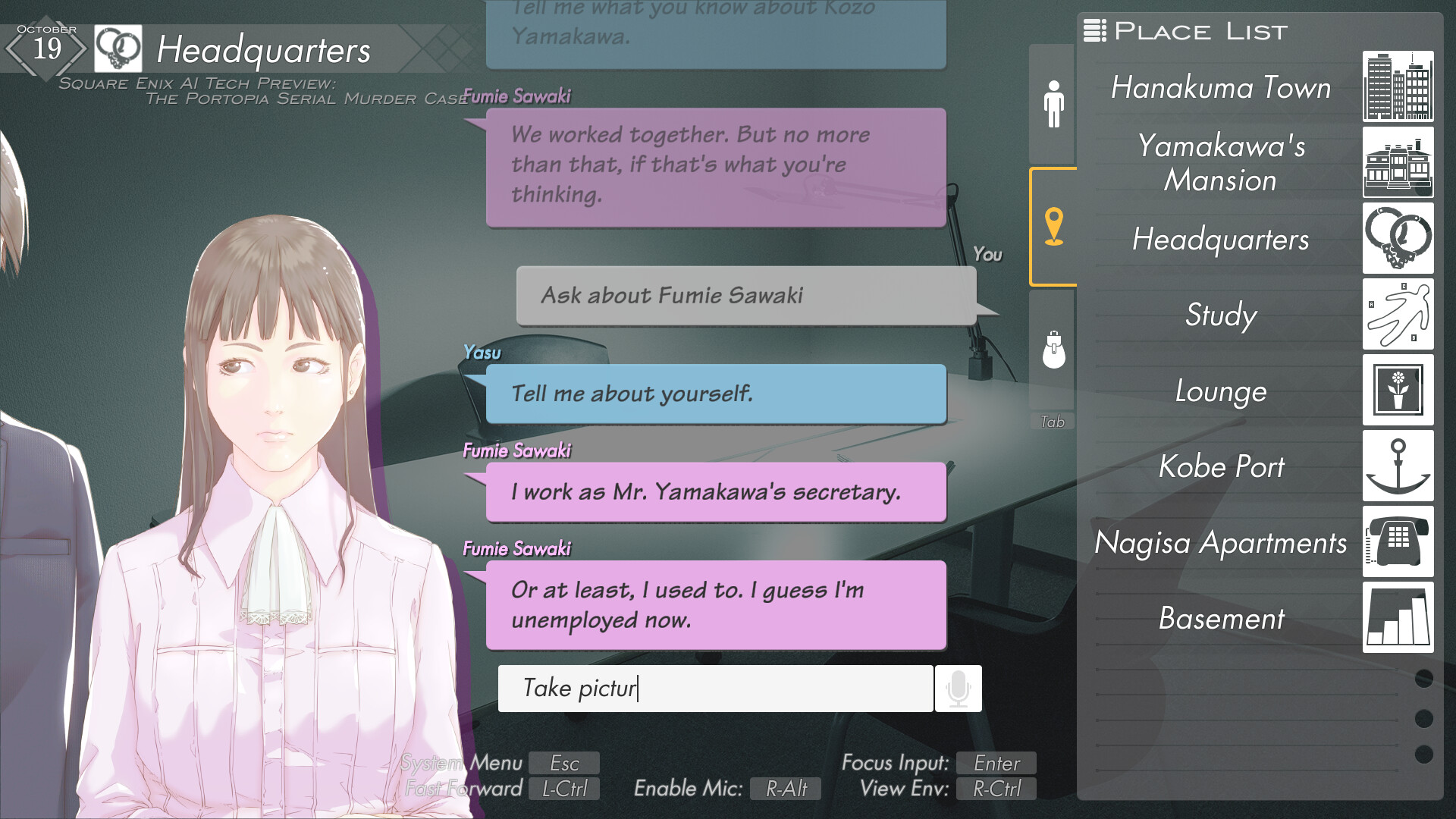

Lastly, although there is great promise for generative and conversational AI in games… we’re still not quite there. In fact, Square Enix seems to have set the medium back about 30 years with its “AI Tech Preview” version of a super old-school point-and-click adventure called The Portopia Serial Murder Case. Its attempt to integrate natural language seems to have completely failed in every conceivable way, making the free game probably among the worst-reviewed titles on Steam. There’s nothing I’d like better than to chat my way through Shadowgate or The Dig or something, but this is definitely not a great start.

Image Credits: Square Enix

The week in AI: ChatBots multiply and Musk wants to make a ‘maximum truth-seeking’ one by Kyle Wiggers originally published on TechCrunch
